This article is based on a presentation at Womble Bond Dickinson’s AI Intensive: Playbook for Innovation and Risk Mitigation virtual summit, which took place May 20, 2025. The discussion included Womble Bond Dickinson Partners John Gray and Kyle Kessler.

Welcome to the AI Era

While many systems that are described as AI have been around for decades (e.g., internet search engines), today’s AI tools are much more powerful and are widely accessible. Generative AI and agentic AI extend the power of artificial intelligence into new areas. Even self-driving cars are now increasingly common.

“Many analysts are predicting continued exponential growth for the generative AI market, including in the healthcare, pharma/biotech, and manufacturing sectors,” Gray said. And many AI-related issues are top of mind for businesses and other organizations across all sectors of the economy.

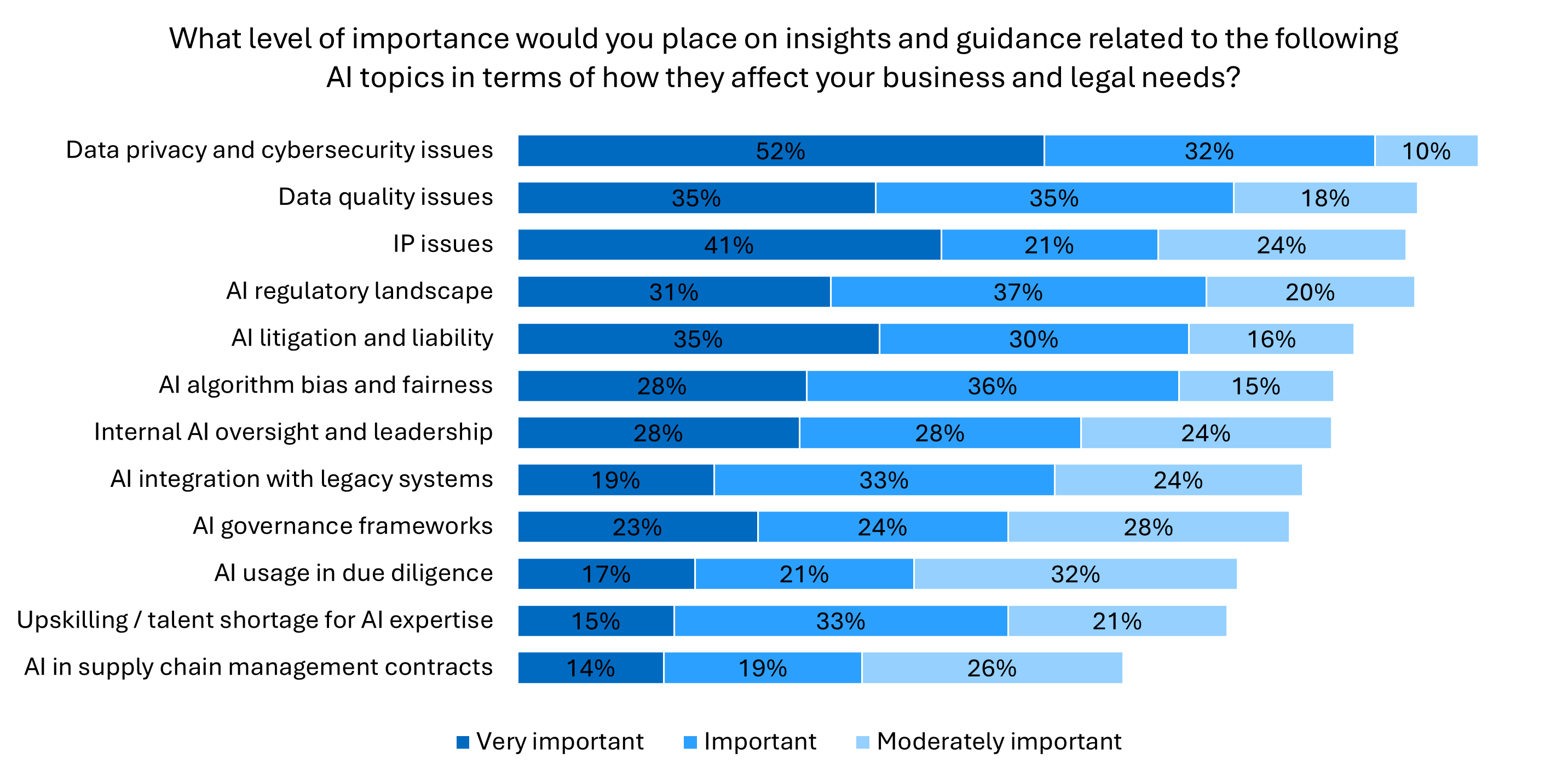

Indeed, a January 2025 Womble Bond Dickinson client survey revealed eight different AI issues that more than 50% of respondents identified as either “important” or “very important” to their respective business and legal needs. Chief among those was data privacy and cybersecurity, which 94% of respondents identified as “very important,” “important,” or “moderately important.”

Gray noted that the survey results also line up with recent points of emphasis for legislators and regulators—namely:

1. Data breaches and nefarious uses of stolen data (with AI allowing threat actors to enhance malware tools and improve their scams);

2. Privacy harms to people whose data might be exposed through various uses or misuses of AI; and

3. Biases built into or resulting from AI usage.

Gray also noted that these concerns are unlikely to dissipate as technologies continue to evolve and the AI Era advances.

Comprehensive State Privacy Laws

With the continued advances and expanded availability of AI and other technologies, Kessler explained: “We have a more sophisticated consumer base that demands more, not just from businesses but from regulators to step in and protect their data.”

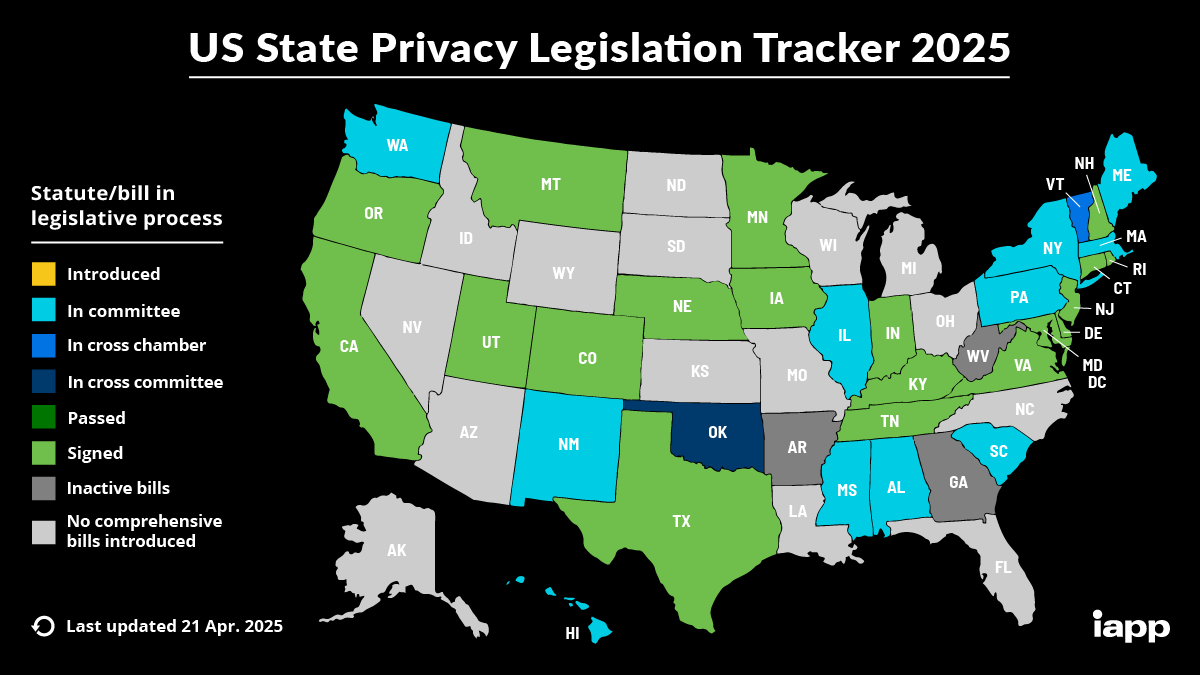

This has led to a swath of state privacy laws, all of which have implications for AI, and some of which address AI expressly. Kessler pointed to 19 comprehensive state privacy laws that have been passed thus far. All of those laws will be in effect by 2026, and many include significant financial penalties for violations.

Kessler noted that, like the EU’s General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA) applies not just to traditional “consumer” data but also to business-to-business (B2B) data, as well as employee and job-applicant data. Generally speaking, though, consumer protection is the key emphasis of these state privacy laws. And consumers or other covered persons typically have some or all of the following rights:

- The right to delete;

- The right to access/data portability;

- The right to amend/correct data;

- The right to limit use of sensitive personal information;

- The right to opt out of sales or sharing (this includes sharing of personal information like IP address and session or browser information collected from pixels, cookies, or other online trackers for targeted or behavioral advertising and certain types of analytics);

- The right to use Global Privacy Controls / Universal Opt-Out Mechanisms (where a browser sends a signal telling a website not to track the visitor, so that no trackers fire from the moment the person lands on the site); and

- The right to be free from discrimination or retaliation for exercising these rights.
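For technical teams, the Global Privacy Control item in the list above can be sketched in a few lines. The example below is a minimal illustration, not a compliance implementation: it assumes the browser expresses the signal as the `Sec-GPC: 1` request header described in the Global Privacy Control proposal, and the function names `gpc_opt_out_requested` and `trackers_allowed` are hypothetical. Which legal effect the signal carries depends on the applicable state law.

```python
from typing import Mapping


def gpc_opt_out_requested(headers: Mapping[str, str]) -> bool:
    """Return True when the request carries a Global Privacy Control signal.

    Assumes the signal arrives as the `Sec-GPC` request header with the
    value "1". Header names are compared case-insensitively.
    """
    for name, value in headers.items():
        if name.lower() == "sec-gpc":
            return value.strip() == "1"
    return False


def trackers_allowed(headers: Mapping[str, str], stored_opt_out: bool = False) -> bool:
    """Decide whether sale/sharing trackers may load for this request.

    `stored_opt_out` is a hypothetical flag for a choice the visitor made
    earlier (for example through a preference center). Either the stored
    choice or the browser signal is enough to block trackers.
    """
    return not (stored_opt_out or gpc_opt_out_requested(headers))
```

Because the check runs on the incoming request, the decision is available before the page is rendered, which is what allows a site to keep trackers from firing from the moment the visitor lands.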

These rights generally apply to AI and non-AI technologies alike, but some laws give consumers additional rights specific to AI or automated decision-making technologies (ADMT). The CCPA, for example, through regulations currently in draft form, will give consumers the right to notice regarding certain uses of ADMT, the right to opt out of certain ADMT uses, and the right to access certain information about ADMT.

Enforcement Trends

In light of the various laws across the country, requirements and areas of enforcement vary from state to state, and much of the enforcement activity thus far hasn’t focused specifically on AI. Nonetheless, Gray said, recent enforcement activity gives an idea as to what regulators are looking for, and “the regulatory risks are truly massive.” Indeed, California, Texas, Virginia, and New Hampshire now have dedicated privacy enforcement units, and settlements and monetary penalties have involved millions of dollars.

But perhaps the biggest threat to businesses is algorithmic disgorgement. That remedy, which has been imposed by the FTC at least five times, requires companies to delete algorithms or AI models used to collect and analyze illegally obtained data. “The FTC is saying, ‘Look, this is a massive harm to consumers. You’re going to have to get rid of the algorithms used to process this illegally gotten data,’” Kessler said.

More broadly, FTC and state regulatory enforcement actions remain focused on unfair and deceptive practices employed by website and app operators with respect to online trackers, opt-outs, and dark patterns. And regulators have encouraged transparency and disclosure across the board.

AI-Related Litigation Trends

Just as there has been a rapid rise in AI usage, there has also been a rapid increase in demand letters, arbitration filings, and putative class-action litigation associated with the use of online trackers. Common website functionality involved in these claims includes online tracking pixels, cookies, and similar tools; AI chatbots; session replay tools (which often utilize AI in some way); and embedded video content with trackers.

Gray noted that, although claims vary by state, plaintiffs are frequently using the California Invasion of Privacy Act and other (often decades-old) wiretapping laws as the basis for their claims and to seek statutory damages and attorney fees. Generally, these claims involve allegations that tracking technologies are used to surreptitiously record a user’s interactions with websites and/or as a “pen register” or “trap and trace” device.

In addition, plaintiffs are bringing claims under a number of other privacy-related statutes, including the Song-Beverly Act in California. That law (which has similar counterparts in at least 14 other states) was designed to protect consumers from having to disclose personal information at the checkout counter, and plaintiffs have asserted that it applies to certain data-collection practices associated with online checkouts.

The federal Video Privacy Protection Act (VPPA) also remains a popular basis for claims. That law, passed in 1988, generally prohibits the disclosure of video rental records containing personally identifiable information. And claimants have alleged that the use of tracking technologies on websites with embedded videos violates the VPPA by collecting and sharing certain information relating to their viewing history.

Of course, “AI only exacerbates a number of these issues,” Gray said, and “you want to take mitigation steps to protect yourself from these types of claims.” Moreover, many cyber-insurance applications have been updated to include a specific section of questions about these types of demands and whether the applicant has been involved in pixel litigation, which can raise the costs of doing business.

Minimizing Risk

What all of this means for emerging AI is that developers and commercial users should consider (and may be required to implement) comprehensive governance programs, particularly for high-risk uses, and thorough risk assessments before deployment. And, although there aren’t a lot of AI-specific laws in place yet, companies should consider how AI intersects with privacy. “Enforcement actions in terms of privacy laws and AI go hand-in-hand,” Kessler said. “It’s [therefore] important to consider, on a case-by-case basis, how [organizations] are processing data.”

More specifically, organizations should consider:

- Strategically revising their terms of use / terms and conditions for their websites to minimize disputes and liability;

- Regularly evaluating privacy-notice disclosures to ensure transparency;

- Auditing whether preference tools are working properly;

- Updating data-processing/protection agreements with vendors; and

- Implementing cookie banners that display on landing and disclose (or obtain affirmative consent for) any tracking technologies, and/or simply removing tracking tools or video content from websites if not core to business objectives.
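Two of the items above, auditing preference tools and gating trackers behind a cookie banner, lend themselves to a simple automated check. The sketch below is illustrative only: the `Tracker` type, the category names, and the treatment of an "essential" category as exempt from consent are all assumptions made for the example, not requirements drawn from any particular statute.

```python
from dataclasses import dataclass
from typing import Iterable, List, Mapping


@dataclass(frozen=True)
class Tracker:
    name: str
    category: str  # hypothetical categories: "essential", "analytics", "advertising"


def permitted_trackers(trackers: Iterable[Tracker], consent: Mapping[str, bool]) -> List[Tracker]:
    """Return the trackers that may fire under the visitor's recorded choices.

    Assumption: "essential" trackers always load; every other category
    requires an affirmative True in `consent`, so a missing entry means "no".
    """
    return [
        t for t in trackers
        if t.category == "essential" or consent.get(t.category, False)
    ]


def audit_fired(trackers: Iterable[Tracker], consent: Mapping[str, bool],
                fired_names: Iterable[str]) -> List[str]:
    """Return names of trackers observed firing without matching consent."""
    allowed = {t.name for t in permitted_trackers(trackers, consent)}
    return sorted(set(fired_names) - allowed)


# Hypothetical inventory of the tools deployed on a site.
TRACKERS = [
    Tracker("session_cookie", "essential"),
    Tracker("ad_pixel", "advertising"),
    Tracker("session_replay", "analytics"),
]
```

In practice, `fired_names` would come from whatever the audit actually observes (for example, network requests captured while loading the page with a given set of banner choices). Any name returned by `audit_fired` points to a tracker that loaded even though the preference tool said it should not.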

In sum, AI technologies, comprehensive privacy laws and regulations, and privacy-related enforcement and litigation are all becoming increasingly prevalent. As a result, organizations should continue to assess their practices and potential risks with respect to privacy and AI, and, where possible, they should consider and take steps to mitigate those risks.
